Well, my Pi hung last night, 2/12/2021 around 22:00, after running since 2/10/2021 at 9:20 – about 3 days.

TL;DR: I don’t think the problem is being caused by NextPVR. The Pi keeps losing available memory whether or not NextPVR is enabled. More detail follows.

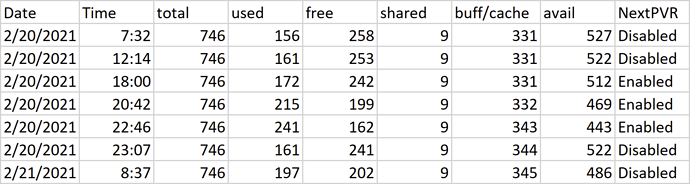

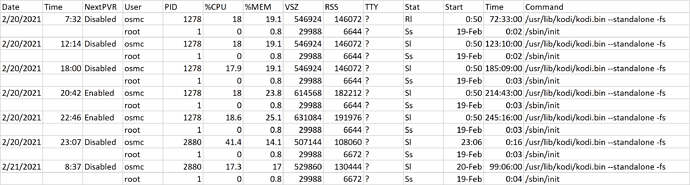

While evaluating the impact of NextPVR, I kept it disabled for most of the day and enabled it for only about 3-4 hours each evening to watch some recorded TV. The pattern was to enable NextPVR around 18:00, then disable it around 22:00. I ran `free -m` right before enabling NextPVR, right after disabling it, and once more in the morning after it had been disabled all night.

My hypothesis going in was that I would see a drop in “available” memory after enabling NextPVR, and near-constant available memory while NextPVR was disabled. That was not the case. Instead, I saw available memory drop steadily throughout the period, whether NextPVR was enabled or not. The following table shows the results of `free -m` during the period:

| Date | Time | Total | Used | Free | Shared | Buff/Cache | Avail | Notes |
|---|---|---|---|---|---|---|---|---|
| 2/10/2021 | 9:20 | 746 | 211 | 212 | 5 | 323 | 477 | After disable NPVR and restart OSMC |
| 2/10/2021 | 18:30 | 746 | 301 | 91 | 5 | 353 | 386 | Before enable NPVR |
| 2/10/2021 | 22:00 | 746 | 348 | 112 | 5 | 285 | 338 | After disable NPVR |
| 2/11/2021 | 9:40 | 746 | 443 | 56 | 5 | 247 | 246 | NPVR still disabled |
| 2/11/2021 | 18:10 | 746 | 467 | 27 | 5 | 251 | 222 | Before enable NPVR |
| 2/11/2021 | 21:45 | 746 | 524 | 49 | 5 | 173 | 166 | After disable NPVR |
| 2/12/2021 | 10:00 | 746 | 576 | 28 | 5 | 141 | 114 | NPVR still disabled |
| 2/12/2021 | 19:00 | 746 | 602 | 29 | 9 | 114 | 83 | Before enable NPVR |

The rows above are the `Mem:` line of each sample. The `Swap:` line read 0 total, 0 used, 0 free every time.

In this table, the difference in available memory between “Before enable NPVR” and “After disable NPVR” on the same day represents the change in memory while NPVR was running. The difference between “Before enable NPVR” on a day and “After disable NPVR” on the previous day represents the change in available memory while the OSMC/Pi was idle with NPVR disabled. There were two observations of each. The drops while NPVR was running were 48M and 56M. The drops while OSMC/Pi was idle and NPVR was disabled were 116M and 83M. The totals were higher when OSMC/Pi was idle, but the time period was much longer. I conclude from this that NPVR is not responsible for the memory leakage I’m experiencing, but I’m probably missing something. In total my Pi leaked almost 400M of memory in just under 3 days.
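Since the idle periods were much longer than the NPVR sessions, the drops are easier to compare once they are divided by elapsed time. Here is a rough Python sketch that does that for the “Avail” column. The `SAMPLES` list and the `periods` helper are just my own illustration, and the True/False flag on each sample is my reading of the Notes column (whether NPVR was enabled from that sample until the next one).

```python
from datetime import datetime

# (timestamp, "Avail" in M from free -m, NPVR enabled until the next sample?)
# The flag is an assumption derived from the Notes column of the table.
SAMPLES = [
    ("2/10/2021 9:20", 477, False),
    ("2/10/2021 18:30", 386, True),
    ("2/10/2021 22:00", 338, False),
    ("2/11/2021 9:40", 246, False),
    ("2/11/2021 18:10", 222, True),
    ("2/11/2021 21:45", 166, False),
    ("2/12/2021 10:00", 114, False),
    ("2/12/2021 19:00", 83, None),  # last sample, nothing follows it
]


def parse(ts):
    """Parse the month/day/year timestamps used in the table."""
    return datetime.strptime(ts, "%m/%d/%Y %H:%M")


def periods(samples):
    """Merge consecutive intervals that share the same NPVR state.

    Returns a list of (npvr_enabled, hours, drop_in_M, M_per_hour).
    """
    out = []
    start = 0
    for i in range(1, len(samples)):
        is_last = i == len(samples) - 1
        # Close the current period at the last sample or when the state changes.
        if is_last or samples[i][2] != samples[start][2]:
            t0, avail0, state = samples[start]
            t1, avail1, _ = samples[i]
            hours = (parse(t1) - parse(t0)).total_seconds() / 3600
            drop = avail0 - avail1
            out.append((state, hours, drop, drop / hours))
            start = i
    return out


if __name__ == "__main__":
    for state, hours, drop, rate in periods(SAMPLES):
        label = "NPVR running" if state else "NPVR disabled"
        print(f"{label:14s} {hours:5.1f} h  drop {drop:3d}M  {rate:5.1f} M/h")
```

The first period starts right after the OSMC restart, so it may include normal start-up growth and is probably best read separately from the other idle periods.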

I have Kodi debug logs for most of this period. I’ll post them if there is any interest. I didn’t save kernel messages during this period as @JimKnopf advised. I will do so if that’s needed for the next iteration. Alternatively, or in addition, I can post full logs from “My OSMC” before the Pi runs out of memory.

I’ve been experimenting with a second possible cause of the memory leakage: the skin helper service. I installed and enabled it a while back because I had designs on cleaning up the display of movies in my library. I didn’t manage to do that and don’t care to now, but I forgot to disable or uninstall the skin helper service. I’m wondering if that might be the problem. I’ve disabled it and I’m collecting results of `free -m` again. So far, the results are tentative and inconclusive. I’ll post what I find in a couple of days or whenever my Pi runs out of memory again. In the meantime, I’m open to any ideas, thoughts, and suggestions.
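For this round I may stop taking samples by hand. Below is a minimal sketch of how that could be automated in Python, assuming the kernel exposes `MemAvailable` in `/proc/meminfo` (as far as I know this is the figure `free` shows in its “available” column). The function names, the log path, and the 30-minute interval are placeholders I made up, not anything provided by OSMC.

```python
import time
from datetime import datetime


def parse_meminfo(text):
    """Return {field: value in kB} from /proc/meminfo-style text."""
    info = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def sample_line(text, now=None):
    """Format one timestamped log line, in M like free -m."""
    info = parse_meminfo(text)
    now = now or datetime.now()
    avail = info["MemAvailable"] // 1024
    free = info["MemFree"] // 1024
    return f"{now:%Y-%m-%d %H:%M} avail={avail}M free={free}M"


def log_samples(path="/home/osmc/memlog.txt", interval_s=1800):
    """Append one sample to `path` every `interval_s` seconds until stopped.

    Both defaults are hypothetical; adjust them to your own setup.
    """
    while True:
        with open("/proc/meminfo") as f:
            line = sample_line(f.read())
        with open(path, "a") as log:
            log.write(line + "\n")
        time.sleep(interval_s)
```

Calling `log_samples()` from an SSH session (or from a small script left running in the background) would append a line every half hour, so the last few samples before a hang are already on disk.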

Thanks again for the support.