Hey everyone.

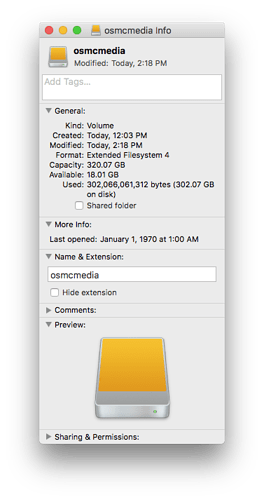

I’m puzzled. I’m running OSMC on my RPi 2, with all the media on an external USB drive plugged into the Pi. The drive is formatted as ext4.

There’s still like 15+ GB of free space on it, yet when I try to run mkdir it yells at me:

cannot create directory “name_of_directory”: No space left on device

How come?

Also, when I run df it gives me this:

    Filesystem     1K-blocks      Used Available Use% Mounted on
    devtmpfs          370520         0    370520   0% /dev
    tmpfs             375512      5100    370412   2% /run
    /dev/mmcblk0p2   7068376   2195756   4490516  33% /
    tmpfs             375512         0    375512   0% /dev/shm
    tmpfs               5120         4      5116   1% /run/lock
    tmpfs             375512         0    375512   0% /sys/fs/cgroup
    /dev/mmcblk0p1    244988     24948    220040  11% /boot
    /dev/sda1      307534284 289950488   1938904 100% /media/osmcmedia
    tmpfs              75104         0     75104   0% /run/user/1000

How can /media/osmcmedia be 100% under Use%?

This is the output from df -i:

    Filesystem       Inodes IUsed    IFree IUse% Mounted on
    devtmpfs          92630   357    92273    1% /dev
    tmpfs             93878   415    93463    1% /run
    /dev/mmcblk0p2   457856 68237   389619   15% /
    tmpfs             93878     1    93877    1% /dev/shm
    tmpfs             93878     4    93874    1% /run/lock
    tmpfs             93878    10    93868    1% /sys/fs/cgroup
    /dev/mmcblk0p1        0     0        0     - /boot
    /dev/sda1      19537920  1993 19535927    1% /media/osmcmedia
    tmpfs             93878     4    93874    1% /run/user/1000

I read somewhere that it’s because I’m running out of inodes, but even if that’s true, I have no idea how to fix it. Plus, df -i reports only 1% for /media/osmcmedia. Shouldn’t it report 100% if there were no inodes left on /media/osmcmedia? And df reports 100% use when there are 15+ GB left on the drive, which is strange, too.
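To make the mismatch concrete, here is a quick Python sketch of the arithmetic on the /dev/sda1 row from the df output above. The three numbers are copied from that row. The Use% formula (used divided by used plus available, rounded up) is my understanding of how GNU df computes it, so treat that as an assumption. The gap between "total minus used" and "Available" might be ext4's root-reserved blocks (the mke2fs default is 5%), but I haven't verified that on this drive.

```python
# Values copied from the /dev/sda1 row of the df output (units: 1K blocks).
total_kb = 307534284
used_kb = 289950488
avail_kb = 1938904

KB_PER_GIB = 1024 * 1024

# Space that is not used according to df's "Used" column.
unused_kb = total_kb - used_kb

# Part of that unused space which df does NOT list as "Available".
# Assumption: this could be ext4's root-reserved blocks (not verified here).
unaccounted_kb = unused_kb - avail_kb

# Assumption: df shows Use% as used / (used + available), rounded up.
denom_kb = used_kb + avail_kb
use_pct = -(-used_kb * 100 // denom_kb)  # integer ceiling division

print(f"unused per df:       {unused_kb / KB_PER_GIB:.2f} GiB")
print(f"available per df:    {avail_kb / KB_PER_GIB:.2f} GiB")
print(f"not listed as avail: {unaccounted_kb / KB_PER_GIB:.2f} GiB "
      f"({unaccounted_kb / total_kb:.1%} of the filesystem)")
print(f"Use% under the assumed formula: {use_pct}%")
```

If that assumed formula is right, the 100% is just rounding, and the 15+ GB I see as "left" is mostly space that df doesn't count as available to a normal user.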

Any help would be appreciated.
