I’m not so sure from your posting history here that I’d say it’s worked without issue.

You’re correct.

Let me be more accurate.

It has worked without issue while using NTFS.

As soon as I use ext4, I’m on here looking for solutions for random problems.

Maybe ext4 isn’t a very suitable file system for removable media, who knows.

Right… That’s why I always have problems with my 7 USB EXT4 drives. NOT. It’s not EXT4 that’s your problem. You are just pushing the hardware too far.

My drives are connected to a WD MyCloud via a USB3 hub. I did start to see odd problems with that, so I’m now migrating to a proper NAS solution. But… I did find that some of the problems were in fact caused by a failing drive. And the ‘failures’ showed up on more than one drive, so it took me some time to narrow it down to a single drive. Once I found the faulty drive, my odd problems cleared up.

Which colour port is the first drive in?

This sounds like a recipe for disaster as you are introducing not just one, but two single points of failure. The first one being the single USB port, the second being the USB port of the primary serving device.

If you have one bad drive, controller, or USB cable on a single drive earlier in the chain, you start to get a serious problem.

As a hunch, I’d blame cabling, but the whole setup doesn’t sound good at all. Buy a NAS for better reliability; or even put those drives in a desktop computer and share them over the network.

The problem may not be the hardware, but it certainly won’t be ext4.

The problem is the configuration of hardware. OP would be better off running each drive off a powered USB hub than running it in the current configuration because:

- Complete drive failure (which seems to be the case here) would be isolated down to either the parent hub, connected socket or cable being the culprit, as all drives failing simultaneously would be extremely unlikely.

- Individual drive failures could also be isolated to socket or USB cable if they acted out on their own.

Presently, OP has a lot of work to do to work out drive ordering and where in the chain his disks are failing.

I’ll confess, it sounds shady, it really does, BUT IT WORKS.

The only drives that had issues when scanned were drives 2 and 3, and last night I had moved a file from 2 to 3 using the Vero’s file manager.

Then when trying to move a file to drive 2, over the network, the Vero refused.

So I sent it to drive 4 with no issue.

Then tried sending it from 4 to 2 using the Vero and it refused.

Used my laptop and the file moved without question.

Then ran e2fsck and found issues on 2.

Checked the others and found issues on 3.

So I think everything is fine for now, just something went weird with that file move.

I’m definitely going to keep an eye on everything that’s for sure.

And I don’t think it’s a file system issue; I’m just being snarky.

Getting an external enclosure like the one I linked to may be better, but all that is, is a hub and case for 5 drives, as opposed to a hub on a hub on a hub, etc.

HDDs on white port, dongle on black.

Swap them.

Full logs needed.

Why? What’s the point?

We’re trying to solve issues, not have a pissing contest about filesystems. It just detracts from actually solving your problem and costs us and you time explaining why we don’t believe the filesystem is the problem. The fact is that your logs so far show block-level failures, which sit below the filesystem level.

Log in first post

Will do.

Why would that matter though?

Why not just pick up a cheap, used desktop and put something like LM or Ubuntu on it? That’s what I’ve done. It doesn’t have to be really powerful to act as a fileserver.

It was a friendly response to:

I moved the drives to the black USB port.

I will unmount and run e2fsck daily on each drive one by one and keep an eye on them.

If I was smart I could probably do some cron job and script to have it run automatically.
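For what it’s worth, the cron idea could look roughly like the sketch below. It is only a sketch under assumptions: the function names are made up for illustration, the device path you pass in is whatever your drive actually is, and it runs e2fsck with `-f -n` (force a check, read-only, answer "no" to every repair) so it reports problems without changing anything. It skips any device that appears in `/proc/mounts`, since e2fsck should not be run on a mounted filesystem.

```python
import subprocess


def mounted_devices(mounts_text):
    """Return the set of device paths listed in /proc/mounts-style text."""
    return {line.split()[0] for line in mounts_text.splitlines() if line.strip()}


def e2fsck_command(device):
    # -f forces a check even if the filesystem is marked clean;
    # -n opens it read-only and answers "no" to every repair prompt.
    return ["e2fsck", "-f", "-n", device]


def check_drive(device, mounts_path="/proc/mounts"):
    """Run a read-only e2fsck on an unmounted device.

    Returns e2fsck's exit code, or None if the device is mounted and
    the check was skipped.
    """
    with open(mounts_path) as f:
        if device in mounted_devices(f.read()):
            return None  # never check a mounted filesystem
    return subprocess.run(e2fsck_command(device)).returncode
```

Calling `check_drive()` once per drive from a small script, run as root and scheduled with cron, would cover the daily check. An exit code of 0 means e2fsck found nothing; 4 means it found errors and left them uncorrected, which is the cue to unmount and run a real repair by hand.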

I REALLY APPRECIATE EVERYONE’S INPUT.

Daisy chaining your drives might well be the issue. If they’re being chained 1-to-1, the poor “tail-end Charlie” in the chain has to communicate through seven USB controllers just to get to the Vero. Then there’s the return portion of the trip to think about…

I see that the Backup Plus devices have one USB port on the back and two on the front. As a stop-gap, I’d suggest that you try connecting disks to both front ports of each disk. This will reduce the depth of USB controllers. Also, as @bmillham suggested, spread them across the two Vero ports and place the dongle on one of the disks.
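To see how deep each disk actually sits, the USB device names under `/sys/bus/usb/devices` on Linux encode the port path: a name like `1-1.2.3` means bus 1, root port 1, then port 2 of a hub, then port 3 of the next hub. A rough sketch for counting the hubs in between, with helper names that are purely illustrative, and which does not handle the root hub entries named `usbN`:

```python
def usb_port_path(sysfs_name):
    """Split a sysfs USB device name such as '1-1.2.3' into (bus, [ports])."""
    # Interface entries carry a ':config.interface' suffix; drop it.
    name = sysfs_name.split(":")[0]
    bus, _, ports = name.partition("-")
    port_list = [int(p) for p in ports.split(".")] if ports else []
    return int(bus), port_list


def hubs_in_between(sysfs_name):
    """Number of external hubs between the root port and the device."""
    _, ports = usb_port_path(sysfs_name)
    # The first port belongs to the root hub; each extra one is an external hub.
    return max(len(ports) - 1, 0)
```

A disk plugged straight into the Vero would give 0 here, while the last disk in a long chain would give a number that grows with every disk in front of it.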

By default, when mounting an ext4 filesystem under Linux, if the clean flag is not set, it performs an fsck, and that one is pretty fast using the journal (default in ext4). So it was fixed before you performed the copy operation.

I definitely agree with @dillthedog

Never daisy-chain disks through various other hubs. Connect them all to ONE hub so they all run on the same one.

By default, I use USB only for temporary backup. Never for permanent storage. I had my fair share of issues in the past and since then, large storage goes into a NAS and is connected through NFS. Period.

Why would a NAS be less likely to have HDD failures than DAS?

I don’t see how Ethernet v USB would make any difference.

I remux all my files on my Win10 machine on a Samsung SSD and when I’m done I attach a CRC to the end of the file name.

I have transferred over 100 TB of data using USB and have never once had a file fail a CRC check after being moved.

Likewise, I haven’t had any issues with Ethernet either.

Obviously a daisy chain introduces more variables and points of failure so I understand the concern people have with that, I get it.

BUT, if the file is moved and passes a CRC check and from that point on it’s only ever read by the Vero, I don’t really see many situations for an issue with the file.
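For anyone wanting to repeat that kind of check after a move, here is a minimal sketch. It assumes the CRC is a CRC32 written as 8 hex digits at the very end of the file name before the extension, optionally in square brackets (for example `Movie [3610A686].mkv`); that naming convention and the function names are assumptions, not necessarily what is used above.

```python
import re
import zlib
from pathlib import Path


def crc32_hex(chunks):
    """CRC32 of an iterable of byte chunks, as 8 uppercase hex digits."""
    crc = 0
    for chunk in chunks:
        crc = zlib.crc32(chunk, crc)
    return f"{crc & 0xFFFFFFFF:08X}"


def read_chunks(path, chunk_size=1 << 20):
    """Yield the file's contents in chunks so large files fit in memory."""
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            yield chunk


def crc_from_name(path):
    """Return the 8 hex digits at the end of the file stem, or None."""
    match = re.search(r"([0-9A-Fa-f]{8})\]?$", Path(path).stem)
    return match.group(1).upper() if match else None


def verify(path):
    """True if the CRC in the file name matches the file's contents."""
    expected = crc_from_name(path)
    return expected is not None and expected == crc32_hex(read_chunks(path))
```

Running `verify()` on the copy at the destination confirms the bytes that landed on the drive match the tag in the name. It says nothing about reads that happen later, which is where a flaky hub or cable would still show up.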

As for the hard drives themselves, failure rates should be the same I’d imagine.

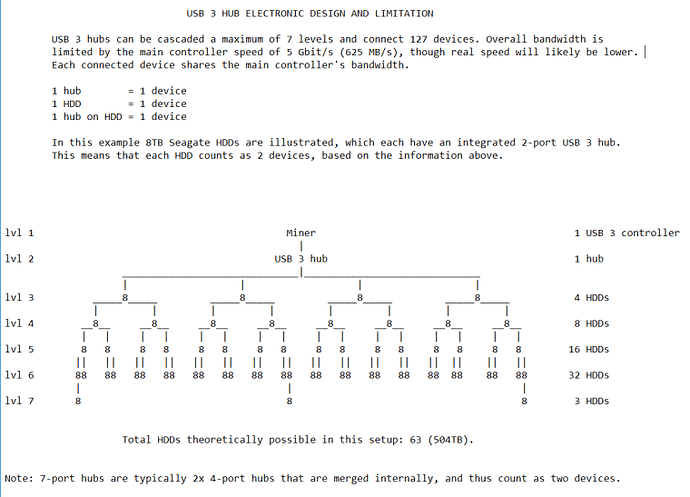

You seem to miss one important thing on that chart. It’s assuming that you have the hub connected to a USB3 port. And it points out that the speeds will likely be lower.