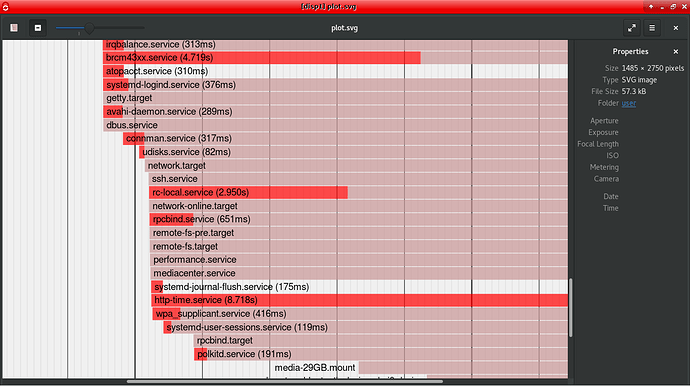

You will get better performance out of remote shares if they are mounted in OSMC’s filesystem. You can do this by putting lines in fstab as described here, but there have been reports of time-outs when OSMC is shutting down. This is probably because systemd is stopping services in the wrong order. Using systemd unit files to mount, rather than fstab, gives us greater control over that order. Here’s how.

We are going to mount a folder shared by the server at 192.168.1.11. The share name is ShareFolder, it needs username yourname and password yourpassword, and we are going to mount it at /mnt/ShareFolder. Your server IP address will be different. You can use any names you like for the share name and mountpoint. They don’t have to be the same, but they must not have spaces in them. The systemd unit files we are going to create must be named after the mountpoint, with the leading slash dropped and the remaining slashes replaced by dashes, i.e. /mnt/ShareFolder → mnt-ShareFolder.mount.
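The naming rule can be checked rather than worked out by hand. On a machine with systemd, `systemd-escape -p --suffix=mount /mnt/ShareFolder` prints the expected unit name. The function below is only a rough sketch of the same rule for simple paths. `unit_name` is a made-up helper, not part of systemd, and it assumes the path has no spaces, dashes or other special characters (systemd escapes those differently).

```shell
#!/bin/sh
# Hypothetical helper: derive a systemd unit file name from a simple mountpoint.
# Assumes the path contains only letters, digits, underscores and slashes;
# use systemd-escape for anything else.
unit_name() {
    path=$1
    suffix=${2:-mount}
    # Drop the leading slash and any trailing slash,
    # then turn the remaining slashes into dashes.
    trimmed=${path#/}
    trimmed=${trimmed%/}
    printf '%s.%s\n' "$(printf '%s' "$trimmed" | tr '/' '-')" "$suffix"
}

unit_name /mnt/ShareFolder            # mnt-ShareFolder.mount
unit_name /mnt/ShareFolder automount  # mnt-ShareFolder.automount
```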

First make sure you can access the share from Kodi or by using smbclient as described in the above Wiki article.

Open a command line on your OSMC device as described here. Then go:

cd /lib/systemd/system

sudo nano mnt-ShareFolder.mount

Now enter the following lines:

[Unit]

Description=Mount smb shared folder

Wants=connman.service network-online.target wpa_supplicant.service

After=connman.service network-online.target wpa_supplicant.service

[Mount]

What=//192.168.1.11/ShareFolder

Where=/mnt/ShareFolder

Type=cifs

Options=noauto,rw,iocharset=utf8,user=yourname,password=yourpassword,uid=osmc,gid=osmc,file_mode=0770,dir_mode=0770

[Install]

#nothing needed here

Save the file with Ctrl-X (answer Y and press Enter when nano asks whether to save), then:

sudo nano mnt-ShareFolder.automount

and enter these lines:

[Unit]

Description=Automount smb shared folder

[Automount]

Where=/mnt/ShareFolder

[Install]

WantedBy=network.target

Save that file then go:

sudo systemctl enable mnt-ShareFolder.automount

sudo systemctl daemon-reload

You can now test mount the share with:

sudo systemctl start mnt-ShareFolder.automount

ls /mnt/ShareFolder

You should see the contents of //192.168.1.11/ShareFolder listed. If all is good, add the share as a source in Kodi (browse to Root filesystem then /mnt/ShareFolder) and remove the corresponding source which starts with smb://.
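If `ls` comes back empty, it helps to confirm whether the share was really mounted. `systemctl status mnt-ShareFolder.mount` and `findmnt /mnt/ShareFolder` are the usual tools. The sketch below does a similar check by reading `/proc/mounts` directly. `is_mounted` is a name invented for this example, and the optional second argument exists only so the function can be tried against a sample file.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a real filesystem is mounted at MOUNTPOINT.
# Usage: is_mounted MOUNTPOINT [MOUNTS_FILE]
is_mounted() {
    # In /proc/mounts, field 2 is the mountpoint and field 3 the filesystem type.
    # An enabled automount unit shows up as type "autofs" even before the share
    # itself is mounted, so that placeholder entry is ignored.
    awk -v mp="$1" '$2 == mp && $3 != "autofs" { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}

if is_mounted /mnt/ShareFolder; then
    echo "/mnt/ShareFolder is mounted"
else
    echo "/mnt/ShareFolder is not mounted; try: systemctl status mnt-ShareFolder.mount"
fi
```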

If you were using fstab, delete the relevant lines from it. The same approach also works with NFS. Your [Mount] part will look something like this:

[Mount]

What=192.168.1.1:/media/NASdrive

Where=/mnt/NAS

Type=nfs

Options=noauto,rw

and the [Automount]:

[Automount]

Where=/mnt/NAS

The rest is all the same as for smb (aka cifs). For this example the unit files will be called mnt-NAS.mount and mnt-NAS.automount.
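Since the smb and nfs variants differ only in a few values, the pair of unit files can be generated from those values. This is a sketch, not a tested installer: `write_units` and its argument order are invented for this example, it assumes a simple mountpoint path (no spaces, dashes or special characters), and it writes into a scratch directory so the result can be reviewed before anything is copied to /lib/systemd/system.

```shell
#!/bin/sh
# Hypothetical helper: write a .mount/.automount pair for one share.
# Usage: write_units OUTDIR WHAT WHERE FSTYPE OPTIONS
write_units() {
    outdir=$1 what=$2 where=$3 fstype=$4 options=$5
    # /mnt/NAS -> mnt-NAS (simple paths only)
    name=$(printf '%s' "${where#/}" | tr '/' '-')

    cat > "$outdir/$name.mount" <<EOF
[Unit]
Description=Mount $fstype shared folder
Wants=connman.service network-online.target wpa_supplicant.service
After=connman.service network-online.target wpa_supplicant.service

[Mount]
What=$what
Where=$where
Type=$fstype
Options=$options
EOF

    cat > "$outdir/$name.automount" <<EOF
[Unit]
Description=Automount $fstype shared folder

[Automount]
Where=$where

[Install]
WantedBy=network.target
EOF
}

# Generate the nfs example from above into a temporary directory.
outdir=$(mktemp -d)
write_units "$outdir" 192.168.1.1:/media/NASdrive /mnt/NAS nfs noauto,rw
ls "$outdir"
```

After checking the generated files, they would be copied into place and enabled with the same systemctl commands as before.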

Checking if there are remote shares in /etc/fstab is probably a step in the right direction but I think that it goes beyond that. There are potentially many use-cases where we want
