Why does Vero V only ever display RGB 4:4:4 and 12bit as the HDMI output signal?

No matter what bit depth the video has.

How can I switch to RGB 4:4:4 and 10bit or RGB 4:4:4 and 8bit?

To get a better understanding of the problem you are experiencing we need more information from you. The best way to get this information is for you to upload logs that demonstrate your problem. You can learn more about how to submit a useful support request here.

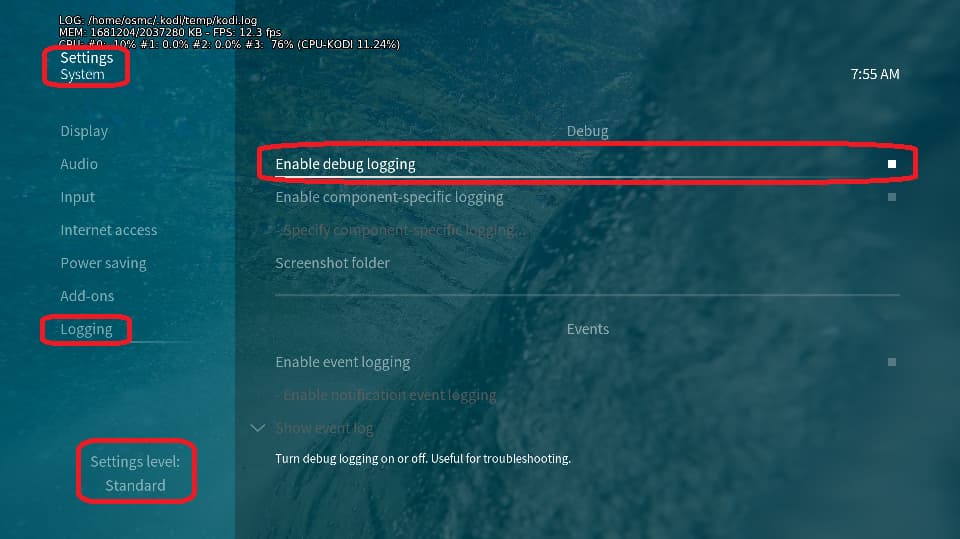

Depending on the skin in use, you may have to set the settings level to Standard or higher. In summary:

- enable debug logging at Settings->System->Logging
- reboot the OSMC device twice(!)
- reproduce the issue
- upload the log set (all configs and logs!) either using the Log Uploader method within the My OSMC menu in the GUI or the ssh method, invoking the command `grab-logs -A`
- publish the URL provided by the log set upload here

Thanks for your understanding. We hope that we can help you get up and running again shortly.

OSMC skin screenshot:

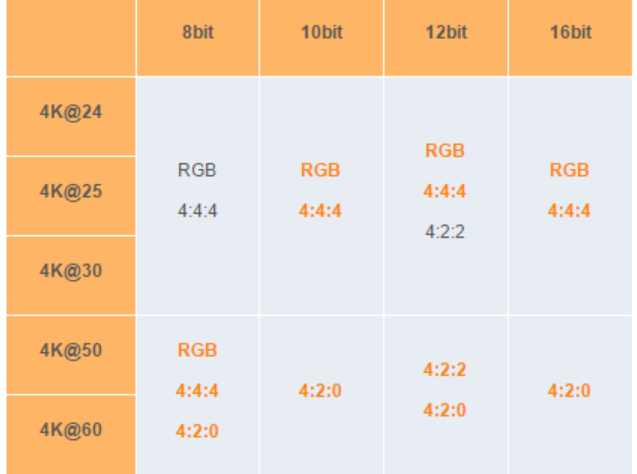

That’s the correct HDMI specification here, isn’t it?

So the Vero V always outputs (almost) all videos in RGB 4:4:4, right?

But at what colour depth does the Vero V output the videos?

Always at 12 bits, or, depending on the video, also at 8 or 10 bits?

Maybe @sam_nazarko can answer my question.

If it really is an error, then I can of course create a log file.

No. Vero V normally outputs YUV 4:4:4 10 bits if your display supports it, otherwise 8 bits, or if your display doesn’t support YUV, RGB 8 bits. In that table 4:4:4 means YUV.

Why do you think you are getting RGB 12-bits?

Because the Yamaha AVR RX-A6A and the Samsung (8K TV) display it.

AVR and TV have HDMI 2.1.

It certainly doesn’t look right. Please post logs so we can see what’s happening.

Thank you. Can you please re-boot and post logs again? Those logs cover several days.

I can’t see anything in there to suggest Vero is sending RGB. When you start a video we get:

[ 165.333703] hdmitx: video: VIC: 3 (93) 3840x2160p24hz

[ 165.333708] hdmitx: video: Video output

Bit depth: 10-bit, Colourspace: YUV444

Colour range RGB: limited, YCC: limited

[ 165.333710] hdmitx: video: Gamut

Colorimetry BT709

Transfer SDR

Then after a short while, HDR is recognised and the Colorimetry and Transfer change to BT2020 and PQ:

[ 165.834751] hdmitx: hdmitx_set_drm_pkt: tf=16, cf=9, colormetry=0

[ 165.834797] hdmitx: video: Colorimetry: bt2020nc

[ 165.834799] hdmitx: video: HDR data: EOTF: HDR10

[ 165.834801] hdmitx: video: Using master display data from frame header

[ 165.834813] hdmitx: video: Master display colours:

Primary one 0.1700,0.7970, two 0.1310,0.0460, three 0.7080,0.2920

White 0.3127,0.3290, Luminance max/min: 1000,0.0001

[ 165.834817] hdmitx: video: Max content luminance: 0, Max frame average luminance: 0

[ 165.835099] hdmitx: vid mute 20 frames before play hdr/hlg video

All as expected. Are the colours on the Samsung what you expect?
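If you want to check this yourself in an uploaded log set, the two log lines that matter are the "Bit depth/Colourspace" line and the `hdmitx_set_drm_pkt` line. The sketch below pulls them out with regular expressions. It assumes the log format shown in the excerpt above, and it also assumes that the `tf=`/`cf=` numbers are ITU-T H.273 code points (16 = PQ, 9 = BT.2020), which matches the "bt2020nc" and "HDR10" lines printed right after them but is not confirmed in the thread. `summarise_hdmitx` is a hypothetical helper name.

```python
import re

# ASSUMPTION: the tf=/cf= numbers printed by hdmitx_set_drm_pkt are ITU-T H.273
# code points (TransferCharacteristics / ColourPrimaries). Only a few are mapped.
H273_TRANSFER = {1: "BT.709", 16: "PQ (SMPTE ST 2084)", 18: "HLG"}
H273_PRIMARIES = {1: "BT.709", 9: "BT.2020"}

DEPTH_RE = re.compile(r"Bit depth:\s*(\d+)-bit,\s*Colourspace:\s*(\w+)")
DRM_RE = re.compile(r"tf=(\d+),\s*cf=(\d+)")


def summarise_hdmitx(log_text: str) -> dict:
    """Report the first output-format and DRM lines found in a chunk of kernel log."""
    summary = {}
    depth = DEPTH_RE.search(log_text)
    if depth:
        summary["bit_depth"] = int(depth.group(1))
        summary["colourspace"] = depth.group(2)
    drm = DRM_RE.search(log_text)
    if drm:
        tf, cf = int(drm.group(1)), int(drm.group(2))
        summary["transfer"] = H273_TRANSFER.get(tf, f"unknown ({tf})")
        summary["primaries"] = H273_PRIMARIES.get(cf, f"unknown ({cf})")
    return summary


# Lines copied from the log excerpt quoted above.
sample_log = """\
[ 165.333708] hdmitx: video: Video output
Bit depth: 10-bit, Colourspace: YUV444
[ 165.834751] hdmitx: hdmitx_set_drm_pkt: tf=16, cf=9, colormetry=0
"""

if __name__ == "__main__":
    print(summarise_hdmitx(sample_log))
```

For the excerpt above this reports 10 bits, YUV444, PQ transfer and BT.2020 primaries. Because it uses `re.search`, only the first video start in a long log is reported; `re.finditer` would list every one.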

If I’m not mistaken, HDR10 only does 10 bits.

In order to do 12 bit you would have to have HDR10+ or Dolby Vision content and obviously everything within the chain from content to display would need to support that too.
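To put 10 versus 12 bits in numbers: each extra bit doubles the code values available per colour component. This minimal sketch only counts the code values a signal can carry; it says nothing about whether a panel can reproduce them, and it ignores limited-range coding, which uses fewer codes.

```python
def levels_per_channel(bits: int) -> int:
    """Distinct code values one colour component can take at a given bit depth."""
    return 2 ** bits


for bits in (8, 10, 12):
    levels = levels_per_channel(bits)
    print(f"{bits}-bit: {levels} levels per component, {levels ** 3:,} combinations")
```

This prints 256, 1024 and 4096 levels per component (16,777,216, 1,073,741,824 and 68,719,476,736 combinations), so going from 10 to 12 bits gives four times the steps per component.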

Yes. The clue is in the name. I’m not aware that HDR10+ can supply 12 bits or that any consumer TV can actually use the extra 2 bits.


And other formats like DV might allow 12bit content and output, but they’re currently not utilizing that capability.

You sure? AIUI, profile 7 with a FEL is equivalent to at least 12 bits, and LLDV can be 4:2:2 which is capable of 12 bits. Whether studios are mastering at 12 bits - who knows?
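A rough way to see why 4:2:2 leaves room for 12 bits is to count average bits per pixel. At 12 bits, 4:2:2 carries the same 24 bits per pixel as 4:4:4 at 8 bits, and HDMI transports YCbCr 4:2:2 in a 24-bit-per-pixel container at up to 12 bits per component. The sketch below is payload arithmetic only; it ignores link encoding overhead and does not model how Dolby Vision actually uses those bits.

```python
# Average number of samples per pixel for each YCbCr chroma subsampling scheme:
# one luma sample per pixel plus the chroma samples shared between pixels.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def bits_per_pixel(subsampling: str, depth: int) -> float:
    """Raw sample payload per pixel, ignoring HDMI link encoding overhead."""
    return SAMPLES_PER_PIXEL[subsampling] * depth


for sub, depth in [("4:4:4", 8), ("4:4:4", 10), ("4:2:2", 12), ("4:2:0", 10)]:
    print(f"{sub} {depth}-bit: {bits_per_pixel(sub, depth):g} bits per pixel")
```

This gives 24, 30, 24 and 15 bits per pixel respectively.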

The 12-bit thing is a bit confusing, even misleading, as there are no consumer 12-bit panels, but it’s not just 10-bit versus 12-bit color that we’re talking about here.

The bits are used differently depending on the content, especially by DV.

Either way, with regard to the OP, it just seems like 12 bit is being misreported by his equipment.

Is this maybe the AVR stating what the output supports rather than what is actually being output?

Thanks a lot for your answers.

No, sorry, but you misunderstand me.

The videos are ISO, in FHD or UHD.

My question is, how does the Vero V determine the color space that is transmitted to the AVR → TV?

I prefer that the Vero V only uses YCbCr as the color space and not RGB.

My AVR and my TV can use YCbCr 4:4:4 with a maximum of 12bit.

Where can I change the color space of the Vero V from RGB to YCbCr and the color depth to 8bit or 10bit?

The default colourspace is YCC 444, 10 bits. Vero will output RGB only if:

- the display does not support YCC

- a VESA display mode such as 1024x768 is selected, or

- the user selects RGB output in Settings->Display (visible in Expert mode)

Vero will output YCC 422 if:

- the display’s HDMI cannot handle the output mode at 444, or

- the user selects 422 output in Settings->Display

Vero will output YCC 420 if the output mode is 4k50/60Hz and 10 bits.
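The post doesn't say why 4k50/60 at 10 bits drops to 4:2:0, but HDMI link bandwidth is a likely reason. This sketch computes an approximate TMDS character rate for a few 3840x2160 modes from the standard CTA-861 pixel clocks and compares it with 600 MHz, the HDMI 2.0 ceiling. Two assumptions are labelled: that Vero V's output is limited to HDMI 2.0 rates, and the simplified rate model (4:2:0 halves the clock, 4:4:4 deep colour scales it by depth/8, 4:2:2 uses a fixed 24-bit container). `tmds_rate_mhz` is a hypothetical helper, not OSMC code.

```python
# Pixel clocks (MHz) of the CTA-861 3840x2160 timings.
PIXEL_CLOCK_MHZ = {"2160p24": 297.0, "2160p50": 594.0, "2160p60": 594.0}

# ASSUMPTION: an HDMI 2.0-class link with a 600 MHz TMDS character-rate ceiling.
HDMI20_MAX_TMDS_MHZ = 600.0


def tmds_rate_mhz(mode: str, subsampling: str, depth: int) -> float:
    """Approximate TMDS character rate needed to carry a mode over HDMI."""
    clock = PIXEL_CLOCK_MHZ[mode]
    if subsampling == "4:2:2":
        # 4:2:2 (up to 12 bits) rides in a 24-bit container: no deep-colour multiplier.
        return clock
    if subsampling == "4:2:0":
        clock /= 2  # 4:2:0 halves the TMDS clock relative to the pixel clock
    return clock * depth / 8  # 4:4:4 / RGB deep colour scales the clock by depth/8


for mode, sub, depth in [("2160p24", "4:4:4", 10),
                         ("2160p60", "4:4:4", 10),
                         ("2160p60", "4:2:0", 10)]:
    rate = tmds_rate_mhz(mode, sub, depth)
    print(f"{mode} {sub} {depth}-bit: {rate:.2f} MHz, "
          f"fits HDMI 2.0: {rate <= HDMI20_MAX_TMDS_MHZ}")
```

Under these assumptions, 2160p24 YCC 444 10-bit (the mode in the log above) needs 371.25 MHz and fits. 2160p60 at 4:4:4 10-bit needs 742.50 MHz and does not fit, while 4:2:0 brings it back down to 371.25 MHz.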

Vero will output 8 bits only if the display does not support 10 bits and the output is RGB.
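The rules above can be read as a small decision function. This is a hypothetical sketch for illustration, not OSMC's code. `Display`, `choose_output` and their flags are invented names, and the rules are taken literally from the list, including that YCC output is always 10 bits. Where the rules could overlap (for example, 4k60 with 422 selected), the thread doesn't give a precedence, so the order used here is a guess.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Display:
    """Hypothetical capability flags, roughly what a display's EDID advertises."""
    supports_ycc: bool = True
    supports_10bit: bool = True
    link_fits_444: bool = True  # can the HDMI link carry the chosen mode at 4:4:4?


def choose_output(display: Display, *, vesa_mode: bool = False,
                  user_rgb: bool = False, user_422: bool = False,
                  is_4k_50_60: bool = False) -> tuple[str, int]:
    """Pick (colourspace, bit depth) following the rules listed in the post.

    ASSUMPTION: the order in which the rules are checked is a guess.
    """
    if not display.supports_ycc or vesa_mode or user_rgb:
        # 8 bits only when the output is RGB and the display lacks 10-bit support.
        return ("RGB", 10 if display.supports_10bit else 8)
    if is_4k_50_60:
        # YCC output is 10 bits, so 4k50/60 falls under the 4:2:0 rule.
        return ("YCC 420", 10)
    if user_422 or not display.link_fits_444:
        return ("YCC 422", 10)
    return ("YCC 444", 10)


print(choose_output(Display()))
print(choose_output(Display(supports_ycc=False, supports_10bit=False)))
```

With a display that supports YCC and 10 bits and no user overrides, this returns `("YCC 444", 10)`, which is the default described above and what the log shows.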

As I posted above, there’s no indication in your logs that Vero is not outputting YCC 444 10 bits. Note that the colourspace of the source media is irrelevant to what Vero outputs. If you are playing 8-bit video, IIRC Vero dithers the extra 2 bits when outputting 10 bits.
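To illustrate why 8-bit sources don't need a different output mode: an 8-bit code value fits exactly into a 10-bit container by shifting it left two bits (limited-range black 16 becomes 64, white 235 becomes 940), and the two new low bits can either stay zero or be filled with dither noise. The thread doesn't describe Vero's actual dithering, so the dithered variant below is a crude, generic random dither for illustration only. Both function names are hypothetical.

```python
import random


def expand_8_to_10(code: int) -> int:
    """Place an 8-bit code value in a 10-bit container by shifting left two bits."""
    return code << 2


def expand_8_to_10_dithered(code: int, rng: random.Random) -> int:
    """Same, but fill the two new low bits with random noise instead of zeros.

    Generic illustration only; not Vero's actual dithering algorithm.
    """
    return (code << 2) | rng.randrange(4)


rng = random.Random(0)
# Limited-range black and white: 8-bit 16/235 map to 10-bit 64/940.
print(expand_8_to_10(16), expand_8_to_10(235))
print([expand_8_to_10_dithered(128, rng) for _ in range(5)])
```

The first line prints `64 940`. The dithered values for code 128 always fall between 512 and 515. Note that this naive version biases the output upward by 1.5 codes on average, which a real implementation would centre.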