It also doesn’t help that the Debian stable version of smartctl is two years old.

Anyhow, I thought this would be easiest with the Vero, but if the SMART program on your Windows machine worked with the enclosure, maybe just go back to that and start a test there.

Yeah, and from Windows it says everything is fine.

So it sounds like Linux just botched up its own filesystem.

The drive itself appears fine.

I think you may be confusing reading SMART data with running the drive self-test. That Linux program (smartctl) can do both. If you download your drive manufacturer’s OEM tool for Windows, it will have options to run short and long drive self-tests, like what you were trying to do with the Vero.
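To make the distinction concrete, here is a minimal sketch using smartctl from smartmontools. `-a` only reads the SMART attributes and logs the drive already holds; `-t short` or `-t long` asks the drive to start a self-test in the background; `-l selftest` shows the results once a test has finished. `/dev/sdX` is a placeholder for the whole-disk device, the function and script names are illustrative, and it assumes root privileges. Through a USB enclosure smartctl may also need a `-d` device-type option before any of this works.

```shell
#!/usr/bin/env bash
# Sketch: reading SMART data vs. running a drive self-test with smartctl.
# Assumes smartmontools is installed, root privileges, and a whole-disk
# device such as /dev/sdX (a placeholder, not a partition like /dev/sdX1).
set -u

read_smart() {
  # Read-only: prints identity info, SMART attributes and the drive's logs.
  smartctl -a "$1"
}

start_selftest() {
  # Asks the drive firmware to begin a "short" or "long" self-test.
  # smartctl returns right away; the test keeps running inside the drive.
  smartctl -t "$2" "$1"
}

show_selftest_log() {
  # Lists the results of self-tests the drive has recorded.
  smartctl -l selftest "$1"
}

# Usage: ./smart-check.sh /dev/sdX [short|long]
if [ "$#" -ge 1 ]; then
  read_smart "$1"
  start_selftest "$1" "${2:-short}"
  echo "Self-test started. Check the result later with: smartctl -l selftest $1"
fi
```

A long test on a multi-terabyte drive can take many hours, so `show_selftest_log` is meant to be run separately afterwards.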

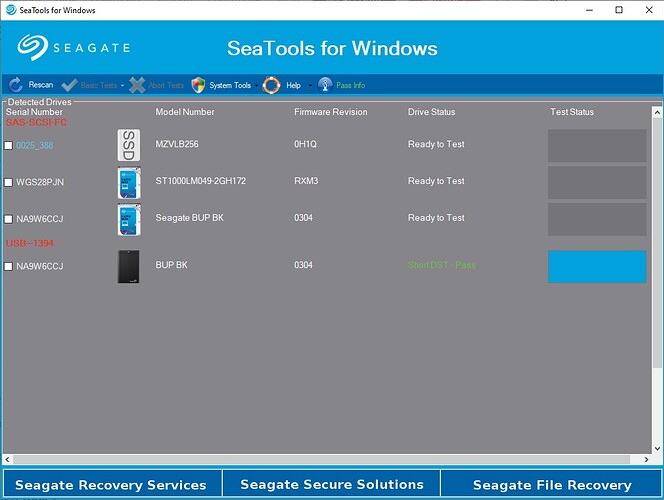

Did you run the drive self-test with SeaTools? The long test should take a very long time.

EDIT: The short test is good for a quick check so you don’t waste time, but I would still run the long test just to be sure.

Just ran the short test.

According to Linux, it’s an issue with the filesystem:

Seagate5TB contains a file system with errors, check forced.

Well, that one we know. The filesystem is corrupted, so you should reformat that partition.

We just wanted to make sure the drive itself is healthy, which is why we suggested the long SMART test.

If you believe the disk is fine, just format the partition and start using it.

Yeah, everything is already backed up, so I might as well.

Good ole Linux.

I figured the filesystem would be more resilient.

In all my years of using Linux, I’ve never had ext4 get corrupted. I suspect it’s because you formatted the drive with all of those custom settings without fully understanding what might happen. When you reformat it, I’d strongly suggest using the default options. They are the defaults for a reason.
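For reference, a minimal sketch of what "default options" means in practice: keep only the volume label and leave the inode ratio, reserved blocks and everything else to mke2fs. This is destructive and assumes root privileges, a verified backup, and a placeholder partition such as `/dev/sdX1`; the function and script names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: recreate an ext4 filesystem with mke2fs defaults, setting only a label.
# DESTRUCTIVE: wipes the partition. Assumes root privileges, a verified backup,
# and a placeholder partition such as /dev/sdX1.
set -eu

reformat_default() {
  local part="$1" label="$2"
  # Unmount first if the partition is currently mounted.
  if findmnt --source "$part" >/dev/null; then
    umount "$part"
  fi
  # No -T, -m or -i overrides: mke2fs chooses inode ratio, reserved blocks, etc.
  mkfs.ext4 -L "$label" "$part"
}

# Usage: ./reformat.sh /dev/sdX1 Seagate5TB
if [ "$#" -eq 2 ]; then
  reformat_default "$1" "$2"
fi
```

Compared with the command quoted in this thread, the only thing kept is `-L`; dropping `-m 0` means mke2fs reserves its usual share of blocks for root.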

Seriously?

So now Linux can’t handle being customized?

If using `mkfs.ext4 /dev/sda1 -T largefile4 -m 0 -L Seagate5TB` is too much customization for Linux to handle, then that’s pretty weak.

But I doubt that’s it.

Well, you and one other person who used those options seem to be having problems.

I bet there is a way to customize an NTFS drive’s formatting that would also cause problems.

You can customize ANY system to the point of making it un-usable.

So you really think that bringing the inode count down from the default was the culprit?

With my configuration I had 2,000,000 inodes on an 8TB HDD. By the time it was full, the filesystem had used only 614 inodes, so there were still 1,999,385 inodes left, even with my crazy customization.
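The inode figures can be sanity-checked with simple arithmetic. A minimal sketch, assuming the stock `/etc/mke2fs.conf`, where the `largefile4` usage type sets `inode_ratio = 4194304` (one inode per 4 MiB of space), and a nominal decimal drive size of 8 × 10^12 bytes. mke2fs rounds per block group and a real drive's byte count differs slightly, so this is an estimate only; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: estimate how many inodes mke2fs creates from its bytes-per-inode ratio.
# Assumes the stock mke2fs.conf, where "-T largefile4" means
# inode_ratio = 4194304 (one inode per 4 MiB), and a nominal decimal drive size.
# mke2fs rounds per block group, so the real count will differ slightly.
set -eu

LARGEFILE4_RATIO=4194304   # bytes of filesystem space per inode

estimate_inodes() {
  # $1 = filesystem size in bytes, $2 = bytes-per-inode ratio
  echo $(( $1 / $2 ))
}

size_8tb=8000000000000     # nominal "8TB" (8 x 10^12 bytes)
used=614                   # inodes in use on the full drive, as quoted in the thread

total=$(estimate_inodes "$size_8tb" "$LARGEFILE4_RATIO")
echo "estimated inodes: $total"
echo "still free with $used in use: $(( total - used ))"
```

This lands at roughly 1.9 million, consistent with the 2,000,000 figure above. On a mounted filesystem, `df -i` reports the actual inode total, used and free counts.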

You can’t possibly think that a smaller inode count caused such an issue.

I think it must have been that the drive became unplugged while it was still mounted.

Probably by one of my little ones.

I either ssh in and umount the drive or power down the entire Vero.
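Over SSH, the unmount step can be wrapped in a small helper. A minimal sketch, assuming root privileges and that the filesystem carries a label (such as the `-L Seagate5TB` used in the mkfs command above), which udev exposes under `/dev/disk/by-label/`; the function and script names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: flush pending writes and unmount a USB drive before unplugging it.
# Assumes root privileges and a labelled filesystem exposed by udev under
# /dev/disk/by-label/ (e.g. the label Seagate5TB).
set -eu

safe_unmount() {
  local dev="/dev/disk/by-label/$1"
  sync              # write any cached data out to the disks
  umount "$dev"     # exits non-zero (stopping the script) if the filesystem is busy
  echo "$1 unmounted, safe to unplug"
}

# Usage: ./safe-unmount.sh Seagate5TB
if [ "$#" -ge 1 ]; then
  safe_unmount "$1"
fi
```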

Windows gets all upset if you yank a drive out without ejecting it, and if the OS is writing to it at the time, then yeah, obviously you’d get corrupted files.

Even so, it would still let you plug it in and browse, read, and write to the disk.

But in this case Linux wouldn’t even let me mount the drive.

Sounds like in this area ext4 has all its eggs in one basket.

Reminds me of new cars that fail to start because a sensor has malfunctioned, even though mechanically the car is sound.

I’ve had great experiences and bad experiences with all OSes and filesystems.

I just don’t understand why people feel the need to pick a side and defend it so religiously.

Linux has its flaws.

Ext4 has its flaws.

Windows has its flaws.

NTFS has its flaws.

Let’s not pretend that these things are perfect.

PS: Thanks for all the help everyone!

Even though we were unable to fix it without wiping the drive, it was an educational process nonetheless.

It sounds like you may be taking the last few posts a bit more personally than intended. When you’re troubleshooting, you have to look at the evidence available and work forward from there. As far as we know, the only people who followed your exact steps are you and the other person who just posted a very similar thread with a very similar issue. What’s more, the type of corruption you both had appears to be very rare. Statistically, it’s unlikely that both cases were caused by a physical fault (although we still recommend checking, as that is good practice). Enough people run ext4-formatted drives with OSMC without reporting this issue that it should be safe to rule out the format in and of itself. So, looking at the information available, where else should we look for the root cause?

There is nothing wrong with what you did or why you did it; the ext4 spec obviously allows it. That doesn’t rule out something running in OSMC having a bug that is only triggered by the way you set up your drive. I know some Windows programs in the past had problems if you used anything other than a 4K cluster size with NTFS.

The end goal of the support staff here is for everyone to have a perfectly working system. Giving a known-working recommendation is not out of line.

Well, every USB enclosure I have that gives that kind of error output from smartctl, I can get working with the `-d sat` or `-d ata` option.

I suggest you try again and first get the informational `-a` output working. What is the output of each of

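`sudo smartctl -a -d scsi`, `sudo smartctl -a -d sat` and `sudo smartctl -a -d ata`, each followed by your HDD’s device (use the whole-disk name, not the numbered partition name such as `/dev/sda1`)?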

Don’t try to start a self-test until it’s clear which device type to use.
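Following that advice, a small read-only sketch that runs the informational `smartctl -a` once per candidate device type and prints each exit status, so the outputs can be compared side by side. It deliberately starts no self-test. `/dev/sdX` and the function name `try_device_types` are illustrative placeholders, and it assumes root privileges.

```shell
#!/usr/bin/env bash
# Sketch: print smartctl's informational output for each candidate device
# type so they can be compared. Read-only: no self-test is started.
# Assumes root privileges; pass the whole-disk device (e.g. /dev/sdX), not /dev/sdX1.
set -u

try_device_types() {
  local dev="$1" t rc
  for t in scsi sat ata; do
    echo "=== smartctl -a -d $t $dev ==="
    smartctl -a -d "$t" "$dev"
    rc=$?
    # smartctl's exit status is a bitmask; 0 means no problem was reported.
    echo "=== exit status: $rc ==="
  done
}

# Usage: ./try-types.sh /dev/sdX
if [ "$#" -ge 1 ]; then
  try_device_types "$1"
fi
```

Whichever type prints a full SMART attribute table (often `sat` for USB-to-SATA bridges) is the one to pass with `-d` when starting a self-test later.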

That just made me think of something. I wonder if it’s a problem with the enclosure?

I have a USB dock style ‘enclosure’ and I’ve had several drives that just did not play nice with the dock. It may be that your enclosure just doesn’t play nice with the drive, or maybe it’s even something with EXT4 that it doesn’t like.

I have a UHD-friendly Blu-ray drive in an enclosure that refuses to read DVDs. I can rip UHD discs and Blu-rays with no problems, but the enclosure just can’t handle DVDs.

That is an interesting link, as the other person also reported he was using a Seagate drive.

Well, the enclosures are what the drives came in.

I’m not buying a bare drive and putting it in a third-party enclosure.

It could be the enclosure, I suppose, but I have no way to test that since I don’t plan on cracking it open.

Here’s my exact drive:

I also have a Blue 4TB and three 8TB drives with the built-in hubs.

I already wiped the drive and am in the middle of restoring the contents.

When it’s complete though, I’ll try those smartctl commands and see what happens.

But honestly, at this point the fact that it now mounts changes so much that I’m not sure those commands would be conclusive even if they work.

@Kontrarian it’s not our fault your drive is failing. I suspect the SMART data will show that it is pre-fail.

But hey, it’s kind of like this: when Windows users don’t have a clue, they blame Linux for their own shortcomings.