I’ve built a number of NAS/DAS setups over the decades and like to think I’ve learned a few lessons. Here they are:

- Never connect primary storage with USB connectors. They work loose and the drives disappear from time to time. For backup storage, this isn’t THAT bad, but for primary storage, it will cause outages and corruption. Just. Don’t.

- Prefer eSATA, SATA, or any connection type with screws - like InfiniBand. These are built specifically for external connections and vibrations.

- Drive cages are available that hold four 3.5" disks, but fit into three 5.25" slots in a standard mid/full tower case. Any cheap case can be used, as long as it has a little connector to go from eSATA-pm (port multiplier) to SATA for normal devices.

- I don’t know how anyone would connect a SATA/eSATA to a non-Intel/AMD motherboard, but I suppose it can be done.

- I suppose USB 3.2 would provide 10Gbps connections in theory, but do any SBCs have USB 3.2 yet? That is typically indicated by a “red” tab inside the USB port. The male connector is still prone to vibration disconnects.
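
To put the “in theory” part in numbers, here is a rough back-of-the-envelope sketch. It assumes only the published line encodings (128b/132b for 10Gbps USB, 8b/10b for 6Gbps SATA) and ignores all protocol and controller overhead, so real throughput will be lower. The function name is made up for this example.

```python
def payload_mb_per_s(line_rate_gbps, data_bits, total_bits):
    """Upper bound on payload throughput in MB/s, counting line encoding only."""
    bits_per_s = line_rate_gbps * 1e9 * data_bits / total_bits
    return bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes

# Assumption: 128b/132b encoding for 10Gbps USB, 8b/10b for 6Gbps SATA.
usb_10g = payload_mb_per_s(10, 128, 132)
sata_6g = payload_mb_per_s(6, 8, 10)

print(f"USB 10Gbps: ~{usb_10g:.0f} MB/s ceiling")
print(f"SATA 6Gbps: ~{sata_6g:.0f} MB/s ceiling")
```

Either ceiling is well above what a single spinning HDD can deliver, so for most home setups the connector staying plugged in matters more than the headline speed.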

Some personal hardware:

I’ve used a 4-drive Addonics external array - it is very old now, but new it was $100.

I’ve used some cheap plastic Rosewill hot-swap cages, ~ US$50. I have one in each of two systems. These are NAS + virtual machine hosts, upgraded from a 65W Dual Pentium to a 65W Ryzen 5 a few years ago. It is amazing what a $300 upgrade can achieve in a desktop. I also run Jellyfin on one of those systems, which provides the DLNA/NAS for Kodi on my playback devices around the house.

I’ve used a US$30 steel cage for 4 drives in one of my x86/64 systems. Added a quality LSI SAS 2-port HBA for the extra internal SATA connections. Performance is pretty great. 2 SAS connectors support 8 SATA HDDs.

I’ll get some Amazon links (no referrer)

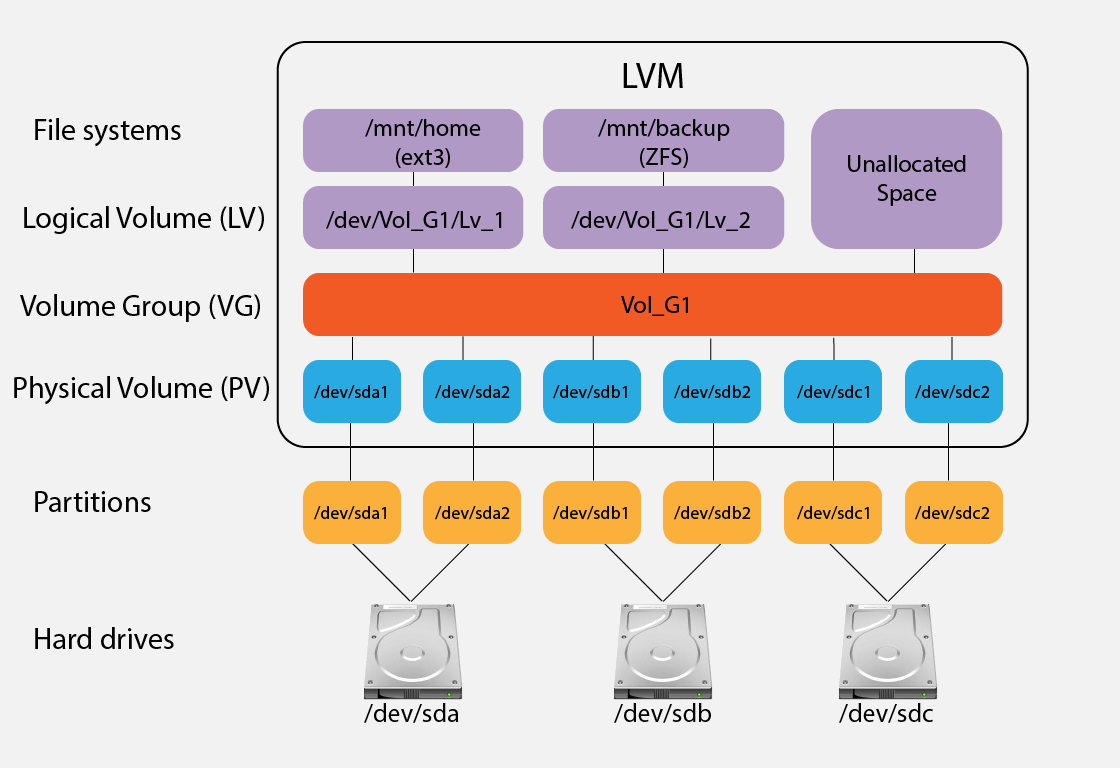

As for NAS/DAS file systems: if Linux will be controlling access, then use a native Linux file system - that would be either ext4 or xfs, in general, for noobs to storage architecture. Consider using LVM or ZFS if you are intermediate level. Regardless, backup design needs to be part of any storage effort. Important files need 2 copies, minimum. Critical files need 3 copies with 1 of them stored in a different region, away from natural disasters that would impact one location, but not the other.
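
The copy-count rule is easy to check mechanically. Below is a minimal sketch, not a backup tool: the `Copy` class, the device and region names, and the idea that two copies on the same device only count once are all my assumptions for illustration.

```python
from dataclasses import dataclass

# Rule from the text: important = 2 copies, critical = 3 copies
# with at least 1 of them in a different region.
MIN_COPIES = {"important": 2, "critical": 3}

@dataclass
class Copy:
    device: str  # hypothetical label, e.g. "nas1"
    region: str  # hypothetical label, e.g. "home"

def meets_policy(level, copies, primary_region="home"):
    """Return True if the copies satisfy the rule for this importance level."""
    # Assumption: two copies on the same device count as one.
    devices = {c.device for c in copies}
    if len(devices) < MIN_COPIES[level]:
        return False
    if level == "critical":
        # At least one copy must live outside the primary region.
        return any(c.region != primary_region for c in copies)
    return True

photos = [Copy("nas1", "home"), Copy("usb-backup", "home")]
taxes = photos + [Copy("friend-nas", "other-city")]

print(meets_policy("important", photos))  # True
print(meets_policy("critical", photos))   # False
print(meets_policy("critical", taxes))    # True
```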

For access over the network, use NFS for all Unix-based OSes. There’s just something about NFS that makes it better for not just streaming, but general use.

Use Samba only for MS-Windows client systems. Beware that MSFT has been drastically changing their implementation of SMB in incompatible ways, so the Samba guys are always playing catch up. Depending on the MS-Windows client versions on your LAN, you can set Samba up to provide the best performance and security with the newer protocol versions. Win7 topped out at SMB 2.1, so if you only have Win10 or later, you should default to SMB 3+. (Strictly speaking, CIFS is the name of the old SMB 1 dialect.) Alas, the way that MSFT systems “find” Samba and other servers on the LAN has changed with Win10 and later. They use WS-Discovery and mDNS (ZeroConf/Bonjour) now, not the old NetBIOS broadcast method that nmbd handles. There are special services that need to be installed, configured and run on Linux for MS-Windows not to need an IP address to find them.
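
As a rough sketch of the “pick the protocol floor from your oldest client” idea, here is a small Python helper that prints an smb.conf fragment. `server min protocol` and the dialect names are real Samba settings, but the client labels and the mapping are my own simplification, so check the result with `testparm` before trusting it.

```python
# Assumed mapping: oldest Windows client on the LAN -> highest SMB dialect
# that client supports. The keys are labels for this sketch only.
MIN_PROTOCOL = {
    "win7": "SMB2_10",   # Windows 7 tops out at SMB 2.1
    "win8": "SMB3_00",   # Windows 8 added SMB 3.0
    "win10": "SMB3_11",  # Windows 10 added SMB 3.1.1
}

def smb_global_fragment(oldest_client):
    """Build a [global] fragment that refuses dialects older than needed."""
    proto = MIN_PROTOCOL[oldest_client]
    return "\n".join([
        "[global]",
        f"    server min protocol = {proto}",
    ])

print(smb_global_fragment("win10"))
```

Setting the floor to what the oldest client can actually speak keeps the weaker dialects turned off without locking anyone out.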

If you use LVM, be cautious about spanning across physical storage devices to create a single file system. Data loss when doing this is common.
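
A quick way to see why: with no redundancy, losing any one disk generally takes the spanned file system with it, so the risk grows with every disk added. The sketch below assumes independent failures and a made-up 3% annual failure rate per disk; plug in your own number.

```python
def p_volume_loss(n_disks, annual_failure_rate):
    """Chance that at least one of n independent disks fails within a year."""
    return 1 - (1 - annual_failure_rate) ** n_disks

# Assumption: 3% annual failure rate per disk, purely illustrative.
for n in (1, 2, 4, 8):
    print(f"{n} disk(s): {p_volume_loss(n, 0.03):.1%} chance of losing the volume")
```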

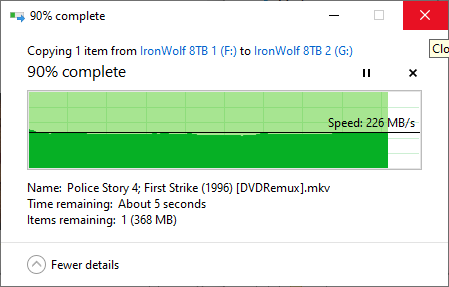

RAID really doesn’t have any place in a home media environment. RAID solves 2 issues - high availability and, maybe, slightly better performance. It never replaces backups which can solve hundreds of issues, including a failed RAID setup. Backups are far more important than any RAID.

That’s probably more than enough to help and confuse.